Vibe Networking

Leveraging the Natural Language Processing Capabilities of LLMs to Generate and Troubleshoot Networking Lab Environments in Cisco Modeling Labs

John Stephens

5/26/2025 · 11 min read

Vibe Coding to Vibe Networking

If you have spent any time on X following AI creators, you have probably heard the term 'vibe coding'. If not, it is "where you fully give in to the vibes, embrace exponentials, and forget that the code even exists." No-code approaches seem to be really taking off. Creators are building everything from integrations for popular software to multiplayer games, and they're doing it by describing what they hope to see and letting AI do the rest. I wanted to get in on the fun. The mission of this blog is to demonstrate practical uses for AI in the networking and security space, so using natural language to describe a network and letting an LLM execute on the vision seemed like a worthwhile project. It took some time and a lot of trying to "show" Claude what I was doing in the API explorer but...

It Works!

The original video is around 5 minutes long. Here is the prompt that I used:

"I would like you to generate a lab that will help me understand BGP communities. Please create a lab with 5 routers. The routers should use at least 5 null routes each to advertise routes to peers. Communities should be created based on the routes originate. Please also create route maps to demonstrate inbound and outbound filtering as well."

What is not shown here is me providing the CML URL, username, and password, and letting Claude know that there would be a certificate error to work around.
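For readers wondering what "working around the certificate error" can look like in code, here is a minimal sketch using only the Python standard library. The `/api/v0/authenticate` path and the shape of the response are my assumptions about the CML REST API, not something shown in the video, and the helper names are made up for illustration. Skipping certificate verification is only reasonable against a lab server you control.

```python
import json
import ssl
import urllib.request


def insecure_context() -> ssl.SSLContext:
    """TLS context that skips certificate checks (self-signed lab servers only)."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False  # must be disabled before relaxing verify_mode
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def build_auth_request(base_url: str, username: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a token request for the CML server."""
    body = json.dumps({"username": username, "password": password}).encode()
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/v0/authenticate",  # assumed endpoint path
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def get_token(base_url: str, username: str, password: str) -> str:
    """Send the request and return the token (assumes a JSON string response)."""
    req = build_auth_request(base_url, username, password)
    with urllib.request.urlopen(req, context=insecure_context(), timeout=10) as resp:
        return json.loads(resp.read())
```

Keeping the request builder separate from the network call makes it easy to check what would be sent without touching a real server.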

There's also no verification of the lab so whether or not it actually works is a bit of a mystery. We'll get to validation and remediation a bit later on.

That said, it is a (perhaps partially) working network lab environment complete with dynamic routing and route filtering in less than 5 minutes. I didn't have to provide IP addressing, AS numbers, community IDs, etc. I just gave Claude a high-level idea of what I'm looking for and watched the magic happen.

I Make Vibe Coding Look Difficult

Most of the time that I spent working on Claude Modeling Labs, I spent with Claude. I also tried to work with Cursor, but I have not been able to spend enough time with it to enjoy the same kind of working relationship. Claude isn't perfect, but I like to think that we work well together. I spent some time with Cline inside of VS Code as well, and I think there is a lot of promise there, but open time has been tough to come by lately, so Claude has been my go-to. Without any roadmap for how to get this done, I stumbled through what I thought would work along with a lot of other techniques. I will step through some of what I learned along the way. I'm sure at least some of the techniques are outdated, but hopefully they provide some ideas to run by your favorite AI ally when you create your own MCP server.

Context Is Everything

When working with Claude (or any LLM), I quickly discovered that context is absolutely everything. The more relevant context you can provide, the better the results you'll get. This became especially apparent when developing the Claude Modeling Labs MCP server.

Using Repomix for Codebase Context

One of the most useful strategies I found was using Repomix to provide Claude with the entire FastMCP codebase as context for configuration and troubleshooting. This meant Claude had a comprehensive understanding of the existing code structure, making its suggestions and troubleshooting far more accurate.

Rather than Claude trying to guess how certain components might work, it could reference the actual implementation details. This dramatically reduced the number of iterations required to get things working correctly.
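To make the idea concrete, here is a rough sketch of what "packing a codebase into one file" means. This is not Repomix's implementation or output format, just a simplified illustration; the `pack_repo` name, the header style, and the default suffix list are all my own choices.

```python
from pathlib import Path


def pack_repo(root: str, suffixes=(".py", ".md", ".toml")) -> str:
    """Concatenate matching text files under root, each behind a path header."""
    root_path = Path(root)
    parts = []
    for path in sorted(root_path.rglob("*")):
        # Only include regular files whose extension is on the allow list.
        if path.is_file() and path.suffix in suffixes:
            rel = path.relative_to(root_path).as_posix()
            text = path.read_text(encoding="utf-8", errors="replace")
            parts.append(f"===== {rel} =====\n{text}")
    return "\n\n".join(parts)
```

The resulting string can be pasted or attached to a chat so the model sees real file paths and real code instead of guessing at them.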

I tried just providing Claude with the README from the FastMCP repo, but that was not enough to produce code that would result in a successful installation of the server in Claude Desktop. Using Repomix to provide the FastMCP codebase to Claude worked like a charm. I was so excited about finally overcoming this obstacle that I tried to develop some of the tools that I wanted to use in the same chat, and that is when things started to get weird. That's where I learned that once an issue is resolved, it is usually best to start a new chat.

Different Chats for Different Problems

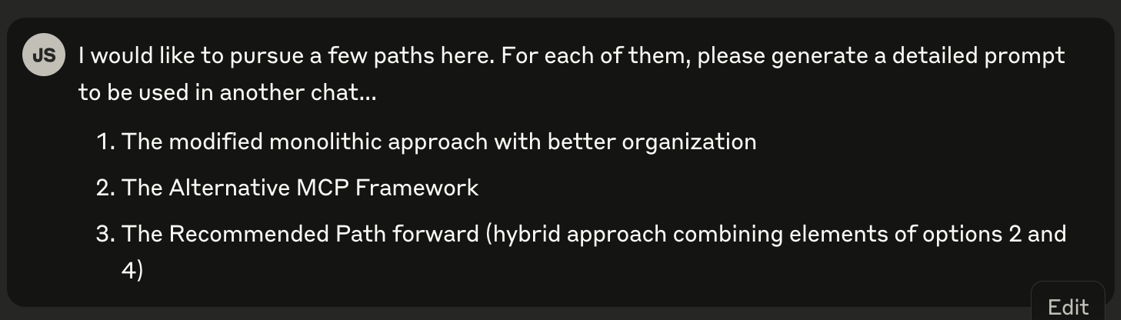

If I were to do this project over again, this is a rule that I would adhere to very strictly. Not just because the context window doesn't always handle tangents very well, but because longer chats are more costly and performance seems to decline as they grow. If it takes some time to get to the bottom of a specific issue and your LLM buddy is staying on track, by all means keep the party going, but if you've achieved what you were hoping to achieve in the chat, you should consider moving on.

There were at least a couple of times where the chat was getting long and I was still not where I wanted to be, and that is generally when I asked Claude to create a prompt for the next chat. In my request I would ask Claude to include any lessons learned along with what we were trying to achieve and any logging information relevant to the issue I was trying to resolve. You might have to modify a thing or two, but at the very least you have a good start for the next chat.

Here is an example I took from a chat late in the process. I was running into some issues that I will go into in more detail later... all of the configuration was contained in one file, and that file was getting too long for Claude to handle without some buggy behavior. I tried a more modular approach, but couldn't get it to work correctly on Windows machines, so Claude provided some options...

"Recommended Path Forward

I recommend a hybrid approach combining elements of Options 2 and 4:

Create a Simple Core MCP Server: Keep a single file for MCP protocol handling that Claude can easily work with.

Implement an Explicit Registration System: Instead of automatic discovery, have tool modules explicitly register with the server.

Use Composition Over Inheritance: Build a system where tools are composed together rather than inherited from base classes.

Centralized Tool Registry: Maintain a central registry that maps tool names to functions."
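For anyone who hasn't seen the explicit registration pattern Claude is describing, it can be sketched in a few lines of plain Python. This is not the code from my server and it doesn't use FastMCP at all. The tool names and return values are placeholders invented for the example.

```python
from typing import Callable, Dict

# Central registry: maps a tool name to the function that implements it.
TOOL_REGISTRY: Dict[str, Callable[..., object]] = {}


def register_tool(name: str):
    """Decorator that explicitly adds a function to the registry."""
    def decorator(func):
        if name in TOOL_REGISTRY:
            raise ValueError(f"tool {name!r} is already registered")
        TOOL_REGISTRY[name] = func
        return func
    return decorator


@register_tool("create_lab")
def create_lab(title: str) -> dict:
    # Placeholder body; a real tool would call the CML API here.
    return {"action": "create_lab", "title": title}


@register_tool("list_labs")
def list_labs() -> dict:
    return {"action": "list_labs"}


def call_tool(name: str, **kwargs):
    """Look a tool up by name and invoke it with keyword arguments."""
    try:
        tool = TOOL_REGISTRY[name]
    except KeyError:
        raise KeyError(f"unknown tool: {name}") from None
    return tool(**kwargs)
```

Because every tool registers itself by name, there is no import-time discovery magic to debug, which is roughly the appeal of the approach Claude recommended.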

My Prompt Request:

The First Prompt Generated:

I need help refactoring my Cisco Modeling Labs (CML) Model Context Protocol (MCP) server to maintain a monolithic structure for compatibility but with better internal organization.

Currently, my CML MCP server is contained in a single file that works correctly with the MCP framework but is difficult to maintain and extend. Due to technical constraints with the MCP framework, I need to keep everything in a single file, but I want to reorganize the code to be more maintainable.

Here's what I'd like help with:

1. Reorganizing the code using classes to group related functionality

2. Using nested functions to encapsulate behaviors

3. Implementing clear separation of concerns within the single file

4. Adding comprehensive documentation and comments

5. Ensuring the refactored code maintains full compatibility with the MCP framework

Key requirements:

- The server must still export a global 'run()' function for MCP integration

- All current tools and functionality must be preserved

- The code should be organized into logical sections (auth, API client, tools, MCP handlers)

- Each tool category should have its own class

- The code should follow best practices for a large Python file

Could you help me create a well-organized monolithic MCP server file that maintains all current functionality but is easier to understand and extend?

While this approach is far from a guarantee that the next chat will be where your issue is resolved, it has proven to be a good starting point.

Less Vibes, More Visuals

I feel like this might have been breaking the vibe coding rules, but sometimes I did actually do a very small amount of the work myself to give Claude the context required to create tools successfully. In this case that meant working through the CML Swagger UI to execute successful API calls and then sharing screenshots with Claude so that it could create API tools that actually worked. This was before web search was built in and also before I became aware of browser-use. I haven't tested it, but I imagine browser-use tools would allow Claude to work through the Swagger UI and figure out how to craft the appropriate API calls for the desired tools. Anyway, I did it manually, and that allowed me to overcome many of the challenges that I experienced in getting the main Claude Modeling Labs tools built (creating nodes, creating links between nodes, ensuring that nodes were deployed with enough interfaces, etc.). This was probably one of the few times that I didn't feel like a toddler clumsily dragging Claude by the hand to the thing that I was trying to manipulate and couldn't.
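The "enough interfaces" problem is easy to picture with a little bookkeeping: every link consumes one interface on each end, so a node needs at least as many interfaces as it has links. The sketch below is only that arithmetic. The function names and the node labels in the usage note are hypothetical, and it makes no API calls.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def interfaces_needed(links: Iterable[Tuple[str, str]]) -> Counter:
    """Count how many interfaces each node needs for the planned links."""
    counts: Counter = Counter()
    for node_a, node_b in links:
        counts[node_a] += 1  # one interface consumed on each end of a link
        counts[node_b] += 1
    return counts


def missing_interfaces(links: Iterable[Tuple[str, str]], existing: Dict[str, int]) -> Dict[str, int]:
    """Return how many extra interfaces each node must get before linking."""
    needed = interfaces_needed(links)
    return {
        node: count - existing.get(node, 0)
        for node, count in needed.items()
        if count > existing.get(node, 0)
    }
```

For example, with links r1-r2, r1-r3, r2-r3, and r1-r4, node r1 needs three interfaces, so a tool could add any missing ones before it tries to create the links.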

So I learned a few things, showed Claude a few things and ended up with an MCP server that could generate a lab environment in Cisco Modeling Labs in 5 minutes or so. Not bad, but not something that I would call "Vibe Networking". Without an interactive component to this it feels more like "Vibe Lab Building" which doesn't sound nearly as cool. I thought I could simply leverage the Runtime API endpoints to do the normal stuff that I would do if I was connected to the console of a network appliance. Turns out it doesn't work that way. I needed to find another solution. That is where iterm-mcp came in.

Vibe Troubleshooting?

The video near the beginning of this post shows Claude successfully creating a lab environment in Cisco Modeling Labs based on a high-level explanation of what I was trying to achieve. Verification of that lab environment was not shown, mostly because I didn't have iterm-mcp at the time and I didn't want to bore everyone with identifying and troubleshooting any issues that were found. It is much more on theme to just have Claude verify that the lab is configured and working as intended. Since this is not something that I could do using the Runtime API endpoints, I needed a way to give Claude access to a terminal emulator. Luckily, I didn't have to build this one. Just install this one: https://github.com/ferrislucas/iterm-mcp ...or so I thought.

Installation of the MCP server was easy and I was able to get Claude connected to one of the nodes in a lab that I was working on, but the implementation at the time did not support the Telnet escape sequence (Ctrl+]). To work around this, I forked the repo and added support. That change has since been merged into the main branch, so it is supported if you clone that repo today.

With Telnet Escape Sequence support I could now have Claude connect to the CML console server and jump between nodes as needed to troubleshoot and resolve configuration issues. That meant that it was time to ratchet up the difficulty a bit.
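If you are curious what a terminal actually sends for that escape, the conventional Telnet escape key is Ctrl+], which is the single ASCII byte 0x1D. Control characters are formed by masking the key's code down to its low five bits. The sketch below shows only that mapping. It is not the code I added to iterm-mcp, and `control_char` is a name I made up for the example.

```python
def control_char(key: str) -> str:
    """Return the ASCII control character produced by Ctrl+<key>."""
    if len(key) != 1:
        raise ValueError("expected a single character")
    code = ord(key.upper())
    # Only @, A-Z, [, \, ], ^ and _ (0x40-0x5F) map to control codes this way.
    if not 0x40 <= code <= 0x5F:
        raise ValueError(f"no control character for {key!r}")
    return chr(code & 0x1F)  # keep the low five bits


# Ctrl+] is the usual Telnet escape; Ctrl+C is the familiar interrupt.
TELNET_ESCAPE = control_char("]")
INTERRUPT = control_char("c")
```

Sending `TELNET_ESCAPE` to a Telnet session drops you to the `telnet>` prompt, which is what makes it possible to leave one console and connect to another.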

Show Me What You Got

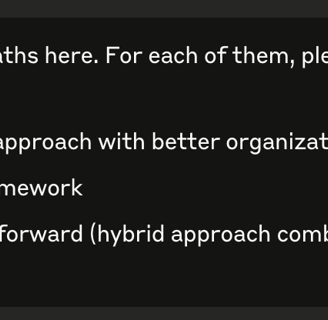

The Lab: What We Built

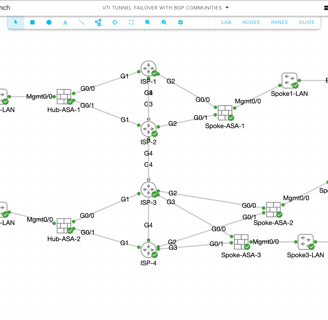

To demonstrate the capabilities of the Claude Modeling Labs MCP server, we created a comprehensive lab environment showcasing VTI (Virtual Tunnel Interface) tunnels with failover capabilities using BGP communities for route manipulation.

Our setup included:

5 Cisco ASA nodes (2 hubs and 3 spokes)

4 ISP routers providing dual ISP connections for each ASA

Internal LAN segments with client machines for testing

Using this topology, we were able to demonstrate some pretty advanced networking concepts:

BGP path selection using communities (65100:100 and 65100:200)

Multi-path VPN tunnels with automatic failover

Route redistribution and manipulation
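To give a feel for how communities can steer path selection and failover, here is a toy model. It assumes one common design: a route map translates each community into a local preference, and the path with the highest local preference wins. Which community was primary, the local preference values, the prefix, and the tunnel names are all hypothetical. This is not the configuration from the lab, and real BGP best-path selection has many more tie-breakers.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical policy: community -> local preference (higher is preferred).
LOCAL_PREF_BY_COMMUNITY = {"65100:100": 200, "65100:200": 150}
DEFAULT_LOCAL_PREF = 100


@dataclass(frozen=True)
class Route:
    prefix: str
    next_hop: str
    community: str


def local_pref(route: Route) -> int:
    """Local preference the inbound policy would assign to this route."""
    return LOCAL_PREF_BY_COMMUNITY.get(route.community, DEFAULT_LOCAL_PREF)


def best_path(routes: List[Route], prefix: str) -> Optional[Route]:
    """Pick the candidate with the highest local preference, if any exist."""
    candidates = [r for r in routes if r.prefix == prefix]
    return max(candidates, key=local_pref, default=None)


primary = Route("10.10.0.0/24", "tunnel1", "65100:100")
backup = Route("10.10.0.0/24", "tunnel2", "65100:200")
```

With both routes present, `best_path` returns the `tunnel1` route. Withdraw it, as would happen when the first tunnel goes down, and the same call returns the `tunnel2` route, which is the failover behavior in miniature.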

While we encountered some hiccups along the way (particularly with the console access and tunnel configuration), the final result was a fully functional network that demonstrated how the Claude Modeling Labs MCP server can be used for complex network design and troubleshooting.

Validation

TL;DW

The above video shows Claude working through the Cisco Modeling Labs console server to troubleshoot from various lab nodes. While the video doesn't include Claude making it all the way through to the goal of validating successful failover from one VTI tunnel to another and back in one prompt, we do see:

Claude seamlessly moving from the console of one node to another using show commands to understand the status of the lab

Claude addressing some of the issues that were encountered (ISP node interfaces were down)

Claude summarizing findings and suggesting next steps for resolution of the remaining issues

What You Didn't See

As much as I believe that this is something to be excited about, it isn't perfect. It did take a few more prompts to get to the point that the lab was fully configured and there were some minor issues along the way:

Claude stated that VTI tunnel failover was working even though parts of the VTI tunnel configuration were missing (resolved in the next prompt)

Performance was a bit slow at times (probably because I have too many MCP servers installed and running at the same time)

Claude seemed to need a couple of attempts to configure a valid enable password (just funny as Claude nailed much more difficult stuff)

Is Claude Gonna Take Our Network Jerbs?

No. Well, not immediately, anyway. I think that there are a number of reasons that we aren't going to see networking jobs disappear because some competent-ish network engineer gave Claude access to a terminal and asked for some network things to be done. I imagine that chief among them is privacy. It seems unlikely that any enterprise is going to be cool with having their production networks configured by a public AI model. That said, it doesn't seem unreasonable to expect that some will explore how they can get something like this done with a model that they are hosting and feel that they have secured. It's also not unreasonable to think that you could be that person. I vibe coded this. No excuses. Get out there and build!

BONUS!

If you're still here, thank you. Here's a small bonus to express my gratitude for hanging in there:

Vibe Learning

While developing this solution and keeping up with developments in the Model Context Protocol space I spent a lot of time thinking about different use cases and how the solution could be extended. One of the use cases that I found myself gravitating towards was training. I spend a lot of time on training platforms to stay sharp. Some platforms are better than others, but pretty much all of them offer a very linear experience. You learn about a thing and then maybe you do some labs where you check the right boxes and fill in the correct values in a text field or whatever until you have configured the thing and now you are ready to take the exam that probably doesn't resemble the training that you completed at all. Fun!

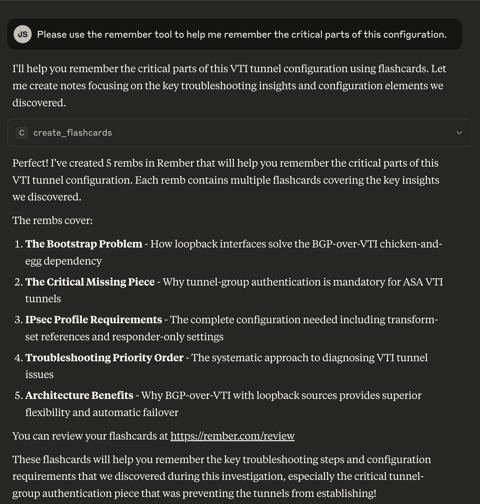

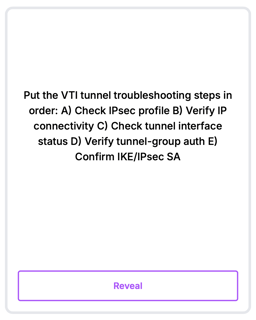

What if instead you could tell Claude that you are interested in understanding a given topic, explore it with Claude, and then have Claude create flashcards to help you remember what you learned? macOS users can do that easily by installing Remember through the Fleur MCP App Store and asking Claude to create flashcards for you!

Discord: @mediocretriumph

Email: john@triumphtips.com
